Light field imaging is an emerging imaging technology.

Reconstructing indoor light fields under complex illumination conditions is crucial for high-demand applications such as entertainment performances and sports events.

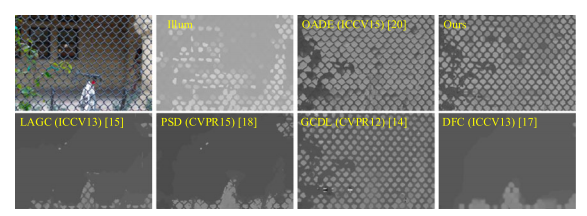

Occlusion-Model Guided Anti-occlusion Depth Estimation in Light Field

Hao Zhu, Qing Wang, Jingyi Yu

IEEE JSTSP, 11(7):965-978, 2017

Paper | Code | BibTeX | GitHub

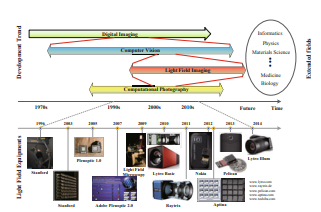

Light Field Imaging: Models, Calibrations, Reconstructions, and Applications

Hao Zhu, Qing Wang, Jingyi Yu

FITEE, 18(9):1236-1249, 2017 (2017.10.27)

Paper | Code | BibTeX | GitHub

"A man can be destroyed but not defeated." - Ernest Miller Hemingway